regularization machine learning example

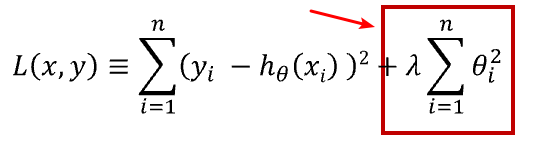

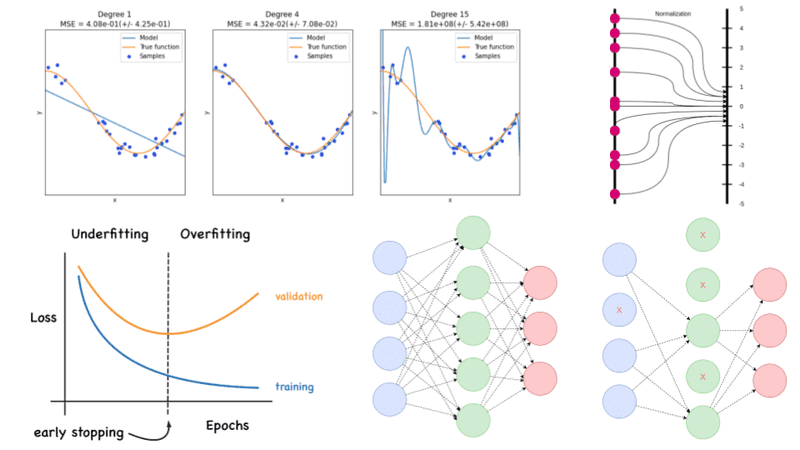

Welcome to this new post of Machine Learning Explained. After dealing with overfitting, today we will study a way to correct overfitting with regularization. Regularization for linear models: a squared penalty on the weights makes the math work nicely in our case.


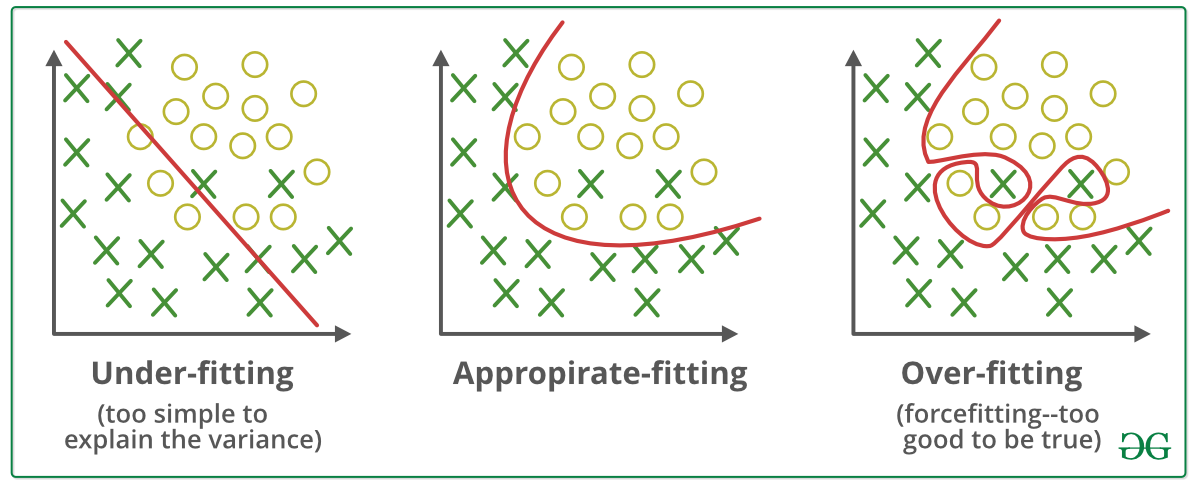

It is a technique to prevent the model from overfitting by adding extra information to it.

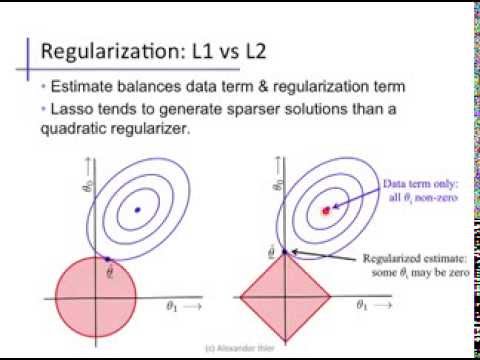

Now, returning to regularization. A regression model that uses the L1 regularization technique is called Lasso Regression, and a model that uses L2 is called Ridge Regression. The term regularization refers to a set of techniques that regularize learning from particular features in traditional algorithms, or from particular neurons in neural networks.

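For L2-regularized least squares, the objective can be written as

$$J_D(w) = \frac{1}{2}\left(w^T(\Phi^T\Phi + \lambda I)w - w^T\Phi^T y - y^T\Phi w + y^T y\right)$$

where $\Phi$ is the design matrix, $y$ the target vector and $\lambda \ge 0$ the regularization strength. The optimal solution is obtained by solving $\nabla_w J_D(w) = 0$:

$$w = (\Phi^T\Phi + \lambda I)^{-1}\Phi^T y$$

This technique discourages learning a more complex or flexible model. The penalty controls the model complexity: larger penalties yield simpler models.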
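The closed-form solution $w = (\Phi^T\Phi + \lambda I)^{-1}\Phi^T y$ can be tried out numerically. The following is a minimal sketch, assuming NumPy and synthetic data; the function name `ridge_closed_form`, the data and the two values of `lam` are illustrative choices, not taken from the original post.

```python
import numpy as np

def ridge_closed_form(Phi, y, lam):
    """Solve (Phi^T Phi + lam * I) w = Phi^T y for w."""
    n_features = Phi.shape[1]
    A = Phi.T @ Phi + lam * np.eye(n_features)
    # Solving the linear system is more stable than forming the inverse.
    return np.linalg.solve(A, Phi.T @ y)

# Synthetic data (an assumption made for illustration only).
rng = np.random.default_rng(0)
Phi = rng.normal(size=(50, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = Phi @ true_w + 0.1 * rng.normal(size=50)

w_small = ridge_closed_form(Phi, y, lam=0.01)   # weak penalty
w_large = ridge_closed_form(Phi, y, lam=100.0)  # strong penalty

# A larger penalty shrinks the weight vector towards zero.
print(np.linalg.norm(w_small), np.linalg.norm(w_large))
```

The second norm comes out smaller than the first, which is the "larger penalties yield simpler models" behaviour in its most basic form.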

You can refer to this playlist on YouTube for any queries regarding the math behind the concepts in Machine Learning. One of the major aspects of training your machine learning model is avoiding overfitting. Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set and avoiding overfitting.

Keep all the features but reduce the magnitude of their weights. The model will have low accuracy on unseen data if it is overfitting. A regression model which uses the L1 regularization technique is called LASSO (Least Absolute Shrinkage and Selection Operator) regression.

L1 regularization or Lasso Regression. How well a model generalizes from the training data determines how well it performs on unseen data. Regularization is one of the most important concepts of machine learning.

We can regularize machine learning methods through the cost function using L1 regularization or L2 regularization. Regularized cost function and Gradient Descent. This article focuses on L1 and L2 regularization.

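The constraint $\|w\|_1 \le s$ gives a convex problem, solvable in polynomial time, but the solution is expensive. LASSO also corresponds to MAP learning with a Laplacian prior $P(w_j) = \frac{1}{2b}\exp\left(-\frac{|w_j|}{b}\right)$.

For the squared penalty, the objective is

$$J_D(w) = \frac{1}{2}(\Phi w - y)^T(\Phi w - y) + \frac{\lambda}{2} w^T w$$

where $\Phi$ is the design matrix and $y$ the target vector. This is also known as L2 regularization, or weight decay in neural networks. By re-grouping terms we get a quadratic function of $w$ that can be minimized in closed form.

Sometimes the machine learning model performs well with the training data but does not perform well with the test data.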
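The link between LASSO and a Laplacian prior can be made explicit with a short derivation. This is a sketch under assumptions that the text does not state: Gaussian observation noise with variance $\sigma^2$ (a symbol introduced here), independent Laplacian priors with scale $b$ on each weight, and the $X$, $Y$ notation of the LASSO objective. Taking the negative log of the posterior gives

$$
\begin{aligned}
-\log P(w \mid X, Y) &= \frac{1}{2\sigma^2}(Y - Xw)^T(Y - Xw) + \frac{1}{b}\sum_{j=1}^{D}|w_j| + \text{const} \\
\hat{w}_{\text{MAP}} &= \arg\min_w\ (Y - Xw)^T(Y - Xw) + \frac{2\sigma^2}{b}\,\|w\|_1
\end{aligned}
$$

so under these assumptions the MAP estimate is the LASSO solution with $\lambda = 2\sigma^2 / b$. A narrower prior (smaller $b$) corresponds to a stronger L1 penalty.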

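$$\text{Cost function} = \sum_{i=1}^{n}\Big(y_i - \beta_0 - \sum_{j=1}^{p}\beta_j x_{ij}\Big)^2 + \lambda\sum_{j=1}^{p}\beta_j^2$$

Here $n$ is the number of samples and $p$ the number of features. The key difference between L1 and L2 regularization is the penalty term. Poor performance can occur due to either overfitting or underfitting the data.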
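A regularized cost function of this kind (squared error plus $\lambda$ times the sum of squared coefficients, intercept left unpenalized) can be minimized with plain gradient descent. The sketch below assumes NumPy and synthetic data; the learning rate, step count and function names are illustrative assumptions and would need tuning on real data.

```python
import numpy as np

def ridge_cost(X, y, beta0, beta, lam):
    """Squared error plus lam times the sum of squared coefficients."""
    residuals = y - beta0 - X @ beta
    return np.sum(residuals ** 2) + lam * np.sum(beta ** 2)

def ridge_gradient_descent(X, y, lam, lr=0.001, n_steps=5000):
    """Batch gradient descent on ridge_cost; the intercept is not penalized."""
    beta0 = 0.0
    beta = np.zeros(X.shape[1])
    for _ in range(n_steps):
        residuals = y - beta0 - X @ beta
        grad_beta0 = -2.0 * np.sum(residuals)
        # The penalty contributes the extra 2 * lam * beta term.
        grad_beta = -2.0 * X.T @ residuals + 2.0 * lam * beta
        beta0 -= lr * grad_beta0
        beta -= lr * grad_beta
    return beta0, beta

# Synthetic data (an assumption made for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = 1.5 + X @ np.array([2.0, -1.0, 0.5]) + 0.1 * rng.normal(size=100)

lam = 10.0
beta0, beta = ridge_gradient_descent(X, y, lam)
print(ridge_cost(X, y, 0.0, np.zeros(3), lam), ridge_cost(X, y, beta0, beta, lam))
```

The only difference from unregularized gradient descent is the `2.0 * lam * beta` term, which pulls every coefficient towards zero at each step.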

Regularization is a form of regression that regularizes, or shrinks, the coefficient estimates towards zero. Regularization in Machine Learning. Ridge regression adds an L2 penalty, which is equal to the square of the magnitude of the coefficients.

By Suf Dec 12 2021 Experience Machine Learning Tips. Regularization adds a penalty on the different parameters of the model to reduce the freedom of the model. Ridge regression adds the squared magnitude of the coefficients as a penalty term to the loss function.

Hence the model will be less likely to fit the noise of the training data.

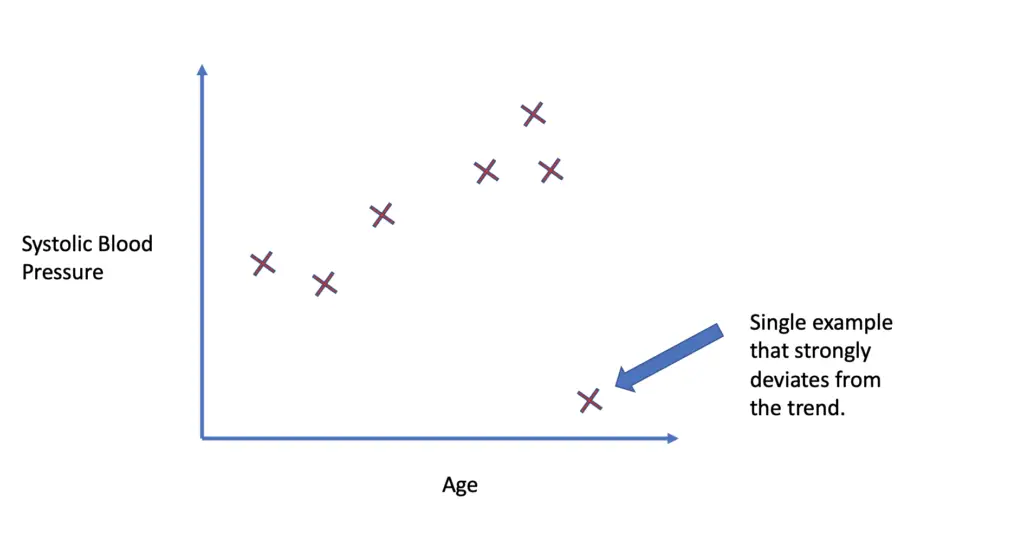

By noise we mean the data points that don't really represent the true properties of the data. Overfitting is a phenomenon that occurs when a machine learning model is constrained to the training set and is not able to perform well on unseen data.

L1 regularization (LASSO):

$$\hat{w} = \arg\min_w\ (Y - Xw)^T(Y - Xw) + \lambda\|w\|_1$$

where $\lambda \ge 0$ and $\|w\|_1 = \sum_{j=1}^{D}|w_j|$. It looks like a small tweak but makes a big difference.
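One way to see the "big difference" is to minimize the LASSO objective $(Y - Xw)^T(Y - Xw) + \lambda\|w\|_1$ numerically and look at which weights survive. The sketch below uses proximal gradient descent (ISTA) with soft-thresholding, which is a swapped-in technique chosen for brevity and not a method named in this post. NumPy, the synthetic data, the value of `lam` and all function names are assumptions made for illustration.

```python
import numpy as np

def soft_threshold(v, t):
    """Shrink each entry of v towards zero by t, clipping at zero."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_objective(X, Y, w, lam):
    r = Y - X @ w
    return r @ r + lam * np.sum(np.abs(w))

def lasso_ista(X, Y, lam, n_steps=2000):
    """Proximal gradient descent on (Y - Xw)^T (Y - Xw) + lam * ||w||_1."""
    # Lipschitz constant of the gradient of the squared-error part.
    L = 2.0 * np.linalg.norm(X, 2) ** 2
    step = 1.0 / L
    w = np.zeros(X.shape[1])
    for _ in range(n_steps):
        grad = -2.0 * X.T @ (Y - X @ w)
        w = soft_threshold(w - step * grad, step * lam)
    return w

# Synthetic data: only features 0 and 3 influence the target.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
Y = X @ np.array([3.0, 0.0, 0.0, -2.0, 0.0]) + 0.1 * rng.normal(size=100)

w_hat = lasso_ista(X, Y, lam=20.0)
print(w_hat)
```

The soft-thresholding step is what lets weights land on exactly zero, which a squared penalty does not do; this is why L1 regularization is often described as performing feature selection.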

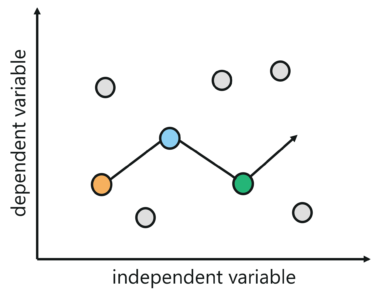

For example, consider a data set that increases linearly at first and then saturates after a point. Regularization normalizes and moderates the weights attached to a feature or a neuron so that algorithms do not rely on just a few features or neurons to predict the result. For example, consider a linear model with the following weights.

The L2 regularization term is $w_1^2 + w_2^2 + w_3^2 + w_4^2 + w_5^2 + w_6^2$. I.e., X-axis = $w_1$, Y-axis = $w_2$ and Z-axis = $J(w_1, w_2)$, where $J(w_1, w_2)$ is the cost function. $0.04 + 0.25 + 25 + 1 + 0.0625 + 0.5625$.

For example, Lasso regression implements L1 regularization. L1 regularization adds an absolute penalty term to the cost function, while L2 regularization adds a squared penalty term to the cost function. For example, Ridge regression and SVM implement L2 regularization.
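The two penalty terms are easy to compare side by side. The following is a minimal sketch in plain Python; the function names and the example weights are made up for illustration.

```python
def l1_penalty(weights, lam):
    """Absolute penalty: lam times the sum of |w|."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """Squared penalty: lam times the sum of w squared."""
    return lam * sum(w ** 2 for w in weights)

weights = [0.5, -2.0, 0.0]  # hypothetical weights
print(l1_penalty(weights, lam=1.0))  # 2.5
print(l2_penalty(weights, lam=1.0))  # 4.25
```

The squared penalty punishes the large weight (-2.0) much more than the small one (0.5), while the absolute penalty charges every unit of weight the same amount.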

Regularization machine learning example, Thursday April 14 2022. The Ridge regularization technique is especially useful when a problem of multicollinearity exists between the independent variables. Figure from Pattern Recognition and Machine Learning (Bishop).
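The effect of ridge regularization under multicollinearity can be illustrated with two almost identical features. This is a sketch assuming NumPy and synthetic data; the variable names, the noise levels and `lam = 1.0` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + 1e-3 * rng.normal(size=200)  # almost an exact copy of x1
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.1 * rng.normal(size=200)

gram = X.T @ X                      # nearly singular because of the collinearity
lam = 1.0
ridge_gram = gram + lam * np.eye(2)

w_ols = np.linalg.solve(gram, X.T @ y)          # unregularized least squares
w_ridge = np.linalg.solve(ridge_gram, X.T @ y)  # ridge solution

print("condition number without penalty:", np.linalg.cond(gram))
print("condition number with penalty:   ", np.linalg.cond(ridge_gram))
print("least-squares weights:", w_ols)
print("ridge weights:        ", w_ridge)
```

Adding `lam` to the diagonal lowers the condition number of the system, so the ridge weights stay close to each other instead of swinging to large values of opposite sign, as unregularized weights can when features are nearly collinear.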

Overfitting: empirical loss and expected loss are different. The smaller the data set, the larger the difference between the two. The larger the hypothesis class, the easier it is to find a hypothesis that fits the training data closely while its expected loss stays high. You can also reduce the model capacity by driving various parameters to zero. Overfitting means the model is not able to predict the output when it deals with unseen data.

$w_1 = 0.2$, $w_2 = 0.5$, $w_3 = 5$, $w_4 = 1$, $w_5 = 0.25$, $w_6 = 0.75$ has an L2 regularization term of 26.915. The simple model is usually the most correct.
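The value 26.915 can be checked by summing the squared weights directly. A minimal sketch in plain Python (variable names are illustrative):

```python
weights = [0.2, 0.5, 5, 1, 0.25, 0.75]

squares = [w ** 2 for w in weights]
l2_term = sum(squares)

print(round(l2_term, 4))  # 26.915
print(max(squares))       # 25
```

The single weight of 5 contributes 25 of the 26.915, so under an L2 penalty almost all of the cost comes from the one large weight.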

When the contour plot is drawn for the above equation, the x and y axes represent the independent variables ($w_1$ and $w_2$ in this case) and the cost function is shown in a 2D view. In machine learning, regularization problems impose an additional penalty on the cost function. This keeps the model from overfitting the data and follows Occam's razor.

Regularization helps to solve the problem of overfitting in machine learning. If a univariate linear regression is fit to the data it will give a straight line which might be the best fit for the given training data but fails to recognize the saturation of the curve. L2 regularization or Ridge Regression.

Using cross-validation to determine the regularization coefficient. Ridge Regression, also called Tikhonov Regularization, is a regularised version of Linear Regression and a technique for analyzing multiple regression data. Regularization will remove additional weights from specific features and distribute those weights evenly.
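Choosing the regularization coefficient by cross-validation can be sketched as follows: fit ridge regression on all folds but one, measure the error on the held-out fold, and keep the coefficient with the lowest average error. The code assumes NumPy and synthetic data; the candidate grid, the fold count and the function names are illustrative assumptions.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge weights for a given regularization coefficient."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def cv_error(X, y, lam, k=5):
    """Mean squared error on held-out folds, averaged over k folds."""
    indices = np.arange(len(y))
    errors = []
    for held_out in np.array_split(indices, k):
        train = np.setdiff1d(indices, held_out)
        w = ridge_fit(X[train], y[train], lam)
        errors.append(np.mean((X[held_out] @ w - y[held_out]) ** 2))
    return float(np.mean(errors))

# Synthetic data: 10 features, only the first two influence the target.
rng = np.random.default_rng(1)
X = rng.normal(size=(40, 10))
y = X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=40)

candidates = [0.001, 0.01, 0.1, 1.0, 10.0, 100.0]
scores = {lam: cv_error(X, y, lam) for lam in candidates}
best_lam = min(scores, key=scores.get)
print(best_lam, scores[best_lam])
```

The rows here are already in random order; with real data it is usually safer to shuffle the indices before splitting them into folds.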

With the L1 penalty there is no more closed-form solution; use quadratic programming:

$$\min_w\ (Y - Xw)^T(Y - Xw) \quad \text{s.t.} \quad \|w\|_1 \le s$$

Concept of regularization. Overfitting happens because your model is trying too hard to capture the noise in your training dataset.

$0.2^2 + 0.5^2 + 5^2 + 1^2 + 0.25^2 + 0.75^2$. From the above expression it is obvious how the ridge regularization technique results in shrinking the magnitude of coefficients.

This video on Regularization in Machine Learning will help us understand the techniques used to reduce the errors while training the model. The general form of a regularization problem is to minimize a loss term plus a weighted penalty term. Let us understand how it works.

Regularization is a technique to reduce overfitting in machine learning. Lasso adds an L1 penalty equal to the absolute value of the magnitude of the coefficients, which restricts the size of the coefficients. Regularization helps the model to learn by applying previously learned examples to the new unseen data.
